IBM Granite 3.2 introduces reasoning AI with a toggle feature, a 2B vision model for document understanding, and daily to weekly forecasting. How does Granite 3.2 compare with industry leaders like GPT-4o and Claude 3.5 Sonnet?

IBM has introduced Granite 3.2, the latest version of its AI model family, designed to deliver enhanced reasoning, document understanding, improved forecasting, and stronger AI safety measures.

With Granite 3.2, IBM aims to provide businesses with AI models that are not only powerful but also smaller and more efficient than many competitors. The models come open-source under the Apache 2.0 license, making them easily accessible and customisable for various enterprise applications.

Granite 3.2 models are available on IBM watsonx.ai, Hugging Face, Ollama, LMStudio, and Replicate, giving developers and businesses a range of options for deployment. The update builds upon Granite 3.1 by introducing significant improvements in reasoning, multimodal AI (vision), time-series forecasting, embeddings, and AI safety. IBM’s goal is to create smaller, high-performance AI models that can outperform larger alternatives while reducing computational costs.

The Granite series: IBM’s innovation in AI development

IBM’s Granite series exemplifies the company’s commitment to developing practical and high-performing AI models that balance efficiency, reasoning power, and safety. With each iteration, IBM has refined its AI technology to better meet enterprise needs.

Granite 1.0 (Introduced in Oct 2023)

Granite 1.0 marked IBM’s entry into the competitive landscape of general-purpose language models. This foundational version was designed to handle a wide range of AI tasks, including natural language processing (NLP), text generation, summarisation, and basic question-answering. Key features included:

- General-purpose AI capabilities: Granite 1.0 was trained on diverse datasets to ensure versatility across industries and use cases.

- Scalability: It was optimised to run on various hardware setups, from cloud servers to on-premise systems, making it accessible to businesses of all sizes.

- Focus on accuracy and reliability: The model prioritised delivering accurate and contextually relevant responses, laying the groundwork for future iterations.

- Ethical AI practices: IBM integrated safeguards to mitigate biases and ensure responsible AI usage, aligning with its commitment to ethical AI development.

Granite 2.0 (Released in Feb 2024)

Building on the success of Granite 1.0, Granite 2.0 introduced significant improvements in instruction-following and task execution. This version was designed to better understand and respond to complex, multi-step prompts, making it more useful for advanced applications. Key advancements included:

- Enhanced instruction-following: Granite 2.0 could interpret nuanced instructions and execute tasks with greater precision, such as generating detailed reports, coding assistance, or creating structured data outputs.

- Improved contextual understanding: The model demonstrated a deeper grasp of context, allowing it to maintain coherence over longer conversations or documents.

- Fine-tuning capabilities: Businesses could fine-tune the model for specific industries or use cases, such as legal document analysis, healthcare diagnostics, or customer support.

- Performance optimisation: Granite 2.0 was more efficient, reducing latency and improving response times, even for computationally intensive tasks.

Granite 3.0 (Launched in Oct 2024)

Granite 3.0 represented a shift toward enterprise-focused AI applications, emphasising efficiency, cost-effectiveness, and scalability. This version was tailored to meet the growing demand for AI solutions that could handle large-scale operations without excessive computational overhead. Key features included:

- Enterprise-grade optimisation: Granite 3.0 was designed to operate efficiently in resource-constrained environments, reducing the computational costs associated with running large language models.

- Specialised models: IBM introduced domain-specific variants of Granite 3.0, such as models optimised for finance, healthcare, and supply chain management.

- Enhanced security and compliance: The model incorporated advanced security features to ensure data privacy and compliance with industry regulations, such as GDPR and HIPAA.

- Integration with IBM ecosystem: Granite 3.0 seamlessly integrated with IBM’s existing suite of enterprise tools, such as Watson, Red Hat, and Cloud Pak, enabling businesses to leverage AI across their entire infrastructure.

Key features of IBM Granite 3.2

IBM’s Granite 3.2 introduces a range of improvements, ensuring high performance with reduced computational requirements. Each of these features represents a major step forward in IBM’s strategy to provide open, trustworthy, and cost-effective AI models for businesses. Here are the key highlights:

- Granite 3.2 Instruct – Introduces reasoning capabilities that can be turned on or off, improving the model’s ability to follow complex instructions without increasing resource usage.

- Granite Vision 3.2 2B – A multimodal AI model designed for document understanding, performing at the level of models five times its size.

- Granite Guardian 3.2 – A more efficient and smaller AI safety model that reduces inference costs while maintaining robust safety checks.

- Granite Timeseries Models – Expands forecasting capabilities to include daily and weekly predictions, enhancing business intelligence applications.

- Granite Embedding – A new sparse embedding model that improves search and ranking efficiency, making AI applications faster and more interpretable.

Granite 3.2 Instruct: Smarter reasoning for complex tasks

IBM’s Granite 3.2 Instruct models, available in 8B and 2B parameter versions, introduce enhanced reasoning capabilities, enabling step-by-step logical processing. This innovation improves performance in mathematics, coding, and complex workflows, making the models more efficient in handling intricate tasks. IBM has integrated reasoning features directly into the core Instruct models, avoiding the need for separate “reasoning models” and ensuring seamless application across various domains.

To illustrate the cost of reasoning that is always switched on, IBM cites DeepSeek-R1, a popular reasoning model, taking 50.9 seconds to answer the simple question, “Where is Rome?”.

IBM’s approach uses Thought Preference Optimisation (TPO), a reinforcement learning technique that enhances reasoning without degrading the model’s general abilities. This allows Granite 3.2 to outperform models like DeepSeek-R1, which often struggle outside specific reasoning tasks.

Toggling reasoning on and off

One of Granite 3.2’s key innovations is the ability to toggle reasoning on or off. This feature provides users with control over computational resources, allowing them to activate reasoning for complex tasks and disable it for quicker responses.

Users can enable or disable reasoning with a simple API toggle:

- "thinking": true → Enables advanced reasoning for complex tasks.

- "thinking": false → Disables reasoning for faster processing.

IBM’s flexible reasoning system ensures that businesses can use extended logical analysis when needed while avoiding unnecessary computational costs.
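As a rough illustration of the toggle described above, the sketch below builds a chat request body in Python. Only the "thinking" flag comes from IBM's description; the function name, the model identifier, and the rest of the payload layout are assumptions made for illustration and will differ between hosting platforms.

```python
import json


def build_chat_request(prompt: str, thinking: bool) -> dict:
    """Build a chat request body with the reasoning toggle set.

    The payload layout and model id are illustrative assumptions;
    only the "thinking" flag is taken from the article.
    """
    return {
        "model": "granite-3.2-8b-instruct",  # hypothetical model id
        "messages": [{"role": "user", "content": prompt}],
        "thinking": thinking,  # True = extended reasoning, False = fast path
    }


# Reasoning on for a multi-step problem, off for a simple lookup.
hard = build_chat_request("Prove that the sum of two odd numbers is even.", True)
easy = build_chat_request("Where is Rome?", False)

print(json.dumps(easy, indent=2))
```

Keeping the flag as a per-request argument, rather than a global setting, lets an application decide case by case whether a prompt is worth the extra compute.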

Reasoning gains without performance trade-offs

Many AI models that focus on reasoning sacrifice general performance in other domains. However, Granite 3.2 Instruct is designed to maintain high performance across various tasks, including:

- Mathematical and logical reasoning (AIME, MATH-500)

- Instruction following (ArenaHard, Alpaca-Eval-2)

- Adversarial safety benchmarks (AttaQ)

Outperforming larger AI models in reasoning

IBM has demonstrated that Granite 3.2 8B Instruct can match or exceed the mathematical reasoning capabilities of larger models, including OpenAI’s GPT-4o, Anthropic’s Claude 3.5 Sonnet, and DeepSeek-R1-Distill. This performance is achieved through inference scaling techniques, which optimise computational efficiency without relying on parameter scaling.

Instead of increasing model size, Granite 3.2 applies inference strategies to deliver accurate reasoning while managing resource use. This allows businesses and developers to use AI reasoning without high compute costs, making Granite 3.2 suitable for enterprise applications.
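One widely used form of inference scaling is majority voting over several sampled answers (often called self-consistency). The Python sketch below shows that idea only; it is not necessarily the technique IBM uses, and the noisy solver is a hypothetical stand-in for a real model call.

```python
import random
from collections import Counter
from typing import Callable, Sequence


def majority_vote(sample_answer: Callable[[], str], n_samples: int) -> str:
    """Draw n_samples candidate answers and return the most frequent one."""
    answers = [sample_answer() for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def make_noisy_solver(
    correct: str, wrong: Sequence[str], p_correct: float, seed: int
) -> Callable[[], str]:
    """Hypothetical stand-in for a model: right with probability p_correct."""
    rng = random.Random(seed)

    def solve() -> str:
        return correct if rng.random() < p_correct else rng.choice(wrong)

    return solve


# Spending more samples (more inference compute) on the same small "model"
# makes the voted answer more reliable without adding parameters.
solver = make_noisy_solver("42", ["41", "43", "40"], p_correct=0.6, seed=0)
for n in (1, 5, 25):
    print(n, majority_vote(solver, n))
```

The trade-off is explicit: accuracy is bought with extra samples at inference time, so the budget can be raised for hard problems and lowered for easy ones.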

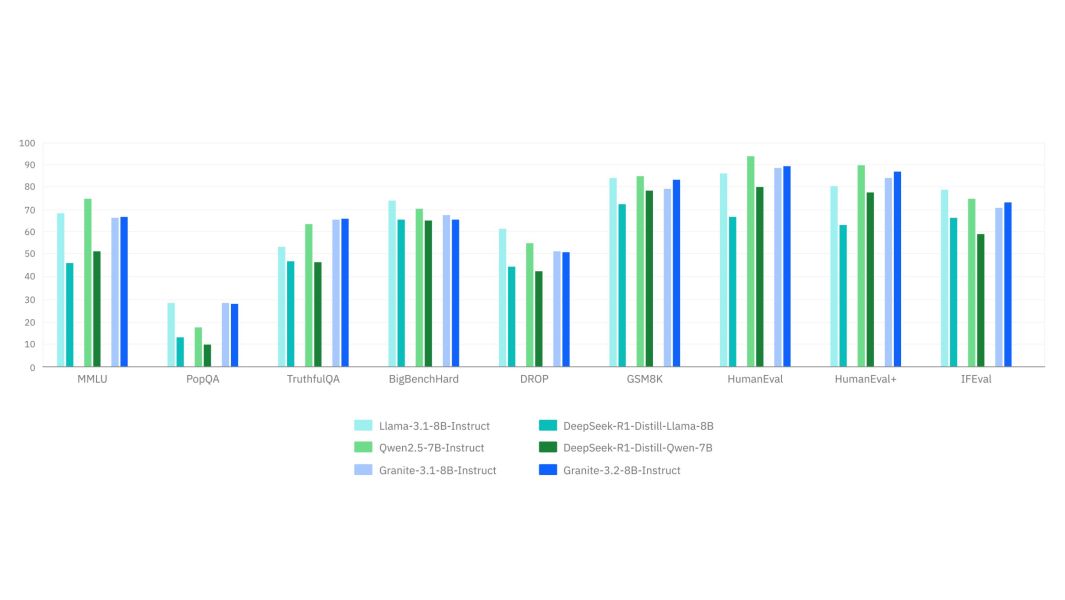

Granite 3.2 vs. DeepSeek-R1 performance comparison:

-

IBM Granite 3.2, Image credit: IBM Granite 3.2 Instruct (with reasoning enabled) outperforms DeepSeek-R1 models on instruction-following benchmarks like ArenaHard and Alpaca-Eval-2.

- Unlike DeepSeek-R1-Distill, Granite 3.2 does not suffer from safety performance drops, ensuring reliability in enterprise applications.

- Granite 3.2 remains robust against adversarial attacks. DeepSeek-R1 models, however, show a significant drop in safety performance (AttaQ benchmark).

IBM’s reasoning approach is based on research from 2022–2024, showing that scaling inference-time computation improves AI reasoning. It uses chain-of-thought prompting, reinforcement learning (TPO), and inference scaling techniques to enhance logical processing. These methods enable Granite 3.2 Instruct to deliver effective reasoning with optimised resource use. The model ensures enterprise-grade performance with cost control, speed, and broad applicability.

Granite Vision 3.2 2B: Bringing AI to document understanding

One of the most exciting additions to IBM’s AI suite is Granite Vision 3.2 2B, a multimodal model that combines text and image processing. Unlike many vision models that focus on natural images, Granite Vision is specialised for document understanding.

Why Focus on Documents?

Most AI vision models struggle with documents, charts, and infographics, which require layout parsing, text extraction, and key-value association. IBM addresses this with Granite Vision 3.2, which has been trained extensively for:

- Document Question Answering (DocVQA)

- Chart Interpretation (ChartQA)

- Text Recognition (OCRBench)

- Diagram and UI Understanding

The DocFM Dataset: A Breakthrough in Document AI

IBM has developed DocFM, a new dataset tailored for document intelligence. This includes:

- 85 million PDFs processed using IBM’s Docling toolkit.

- 26 million synthetic question-answer pairs created to improve document analysis accuracy.

Performance Comparison

- Granite Vision 3.2 matches or outperforms larger models like Llama 3.2 11B and Pixtral 12B on document-related tasks.

- The model provides OCR-free document understanding, reducing error accumulation from external OCR systems.

Sparse attention for safety monitoring

IBM has integrated sparse attention vectors into Granite Vision 3.2 to detect harmful inputs without needing external guardrails. This means safer AI deployments for enterprise users.

Granite Guardian 3.2: More efficient AI safety

The Granite Guardian 3.2 series provides advanced risk detection in AI interactions, ensuring enterprise compliance and security.

Granite Guardian 3.2 new features:

- Verbalised Confidence: Instead of a simple “Yes/No” risk assessment, Granite Guardian now indicates “High” or “Low” confidence, helping businesses understand safety decisions better.

- Slimmer Models: The new Granite Guardian 3.2 5B model is 30% smaller than previous versions but maintains similar safety performance.

- Granite Guardian 3.2 3B-A800M: A lightweight safety model that uses Mixture of Experts (MoE) for cost-effective risk monitoring.

IBM’s approach ensures Granite Guardian 3.2 models do not suffer from the performance degradation seen in models like DeepSeek-R1-Distill. Instead, Granite’s models maintain high accuracy and robustness against adversarial attacks.
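To show how a verbalised confidence level might be used downstream, here is a minimal Python sketch. The GuardianVerdict type, the decide_action function, and the routing policy are all hypothetical; only the combination of a Yes/No risk label with a "High" or "Low" confidence comes from the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GuardianVerdict:
    """Hypothetical container for a safety model's output."""

    risky: bool      # the Yes/No risk label
    confidence: str  # "High" or "Low", as verbalised by the model


def decide_action(verdict: GuardianVerdict) -> str:
    """Map a verdict to an action under an illustrative policy.

    High-confidence verdicts are acted on automatically;
    low-confidence verdicts are sent to a human reviewer.
    """
    if verdict.confidence not in ("High", "Low"):
        raise ValueError(f"unexpected confidence: {verdict.confidence!r}")
    if verdict.confidence == "Low":
        return "review"
    return "block" if verdict.risky else "allow"


print(decide_action(GuardianVerdict(risky=True, confidence="High")))
print(decide_action(GuardianVerdict(risky=True, confidence="Low")))
```

A policy like this is one way the extra signal could reduce both false blocks and missed risks, since borderline cases no longer have to be forced into a binary outcome.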

Granite Timeseries models: Expanding forecasting horizons

IBM’s Granite Timeseries models, also known as Tiny Time Mixers (TTMs), have been widely adopted for financial forecasting, supply chain management, and economic predictions.

Key Updates in TTM-R2.1:

- New daily and weekly forecasting capabilities, in addition to previous minutely and hourly predictions.

- Outperforms Google’s TimesFM-2.0 and Amazon’s Chronos-Bolt-Base on forecasting benchmarks.

- Compact models (1M–5M parameters) outperform much larger models (500M+ parameters), making them ideal for cost-efficient forecasting.
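Daily and weekly forecasting works on series sampled at those resolutions. The Python sketch below shows one generic way to roll hourly readings up into daily and weekly totals before forecasting; it is ordinary preprocessing written for illustration, not part of TTM or IBM's tooling, and the function names and the choice of sum aggregation are assumptions.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def aggregate(readings, key):
    """Sum (timestamp, value) readings into buckets chosen by key(timestamp)."""
    totals = defaultdict(float)
    for ts, value in readings:
        totals[key(ts)] += value
    return dict(sorted(totals.items()))


def by_day(ts):
    """Bucket key for daily resolution."""
    return ts.date()


def by_iso_week(ts):
    """Bucket key for weekly resolution: (ISO year, ISO week number)."""
    iso = ts.isocalendar()
    return (iso[0], iso[1])


# Two weeks of synthetic hourly readings, starting on a Monday at midnight.
start = datetime(2025, 3, 3)
hourly = [(start + timedelta(hours=h), 1.0) for h in range(14 * 24)]

daily = aggregate(hourly, by_day)        # 14 buckets of 24.0
weekly = aggregate(hourly, by_iso_week)  # 2 buckets of 168.0
print(len(daily), len(weekly))
```

Whether to sum, average, or take the last value per bucket depends on the quantity being forecast; sales volumes are usually summed, while prices or sensor levels are usually averaged.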

Granite Embedding: Sparse Embeddings for Efficient Search

The Granite Embedding 30M Sparse model introduces sparse embedding techniques, making AI-powered search faster and more interpretable.

Why Sparse Embeddings Matter

- Traditional dense embeddings require high computational power and lack interpretability.

- Sparse embeddings assign weights to words/tokens in a document, making search and ranking more precise and faster.
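The interpretability point can be made concrete with a small Python sketch. A sparse embedding is represented here as a mapping from token to weight, and relevance is the dot product over the tokens a query and a document share. The weights are made up by hand for illustration; a real model such as Granite-Embedding-30M-Sparse would produce them, and the function names are hypothetical.

```python
def sparse_score(query: dict[str, float], doc: dict[str, float]) -> float:
    """Dot product over the tokens that appear in both sparse vectors."""
    return sum(weight * doc[token] for token, weight in query.items() if token in doc)


def explain(query: dict[str, float], doc: dict[str, float]) -> list[tuple[str, float]]:
    """Per-token contributions to the score, largest first."""
    contributions = [
        (token, weight * doc[token]) for token, weight in query.items() if token in doc
    ]
    return sorted(contributions, key=lambda item: item[1], reverse=True)


# Hand-written weights, for illustration only.
query = {"granite": 2.0, "forecasting": 1.0}
docs = {
    "doc_a": {"granite": 1.5, "forecasting": 0.5, "ibm": 1.0},
    "doc_b": {"granite": 0.5, "vision": 2.0},
}

ranked = sorted(docs, key=lambda name: sparse_score(query, docs[name]), reverse=True)
print(ranked)                          # ['doc_a', 'doc_b']
print(explain(query, docs["doc_a"]))   # [('granite', 3.0), ('forecasting', 0.5)]
```

Because only shared tokens contribute, scoring touches a handful of entries rather than every dimension of a dense vector, and the per-token breakdown shows exactly why a document ranked where it did.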

Performance Gains

- Granite-Embedding-30M-Sparse performs as well as dense embeddings on BEIR benchmarks while being more efficient.

- Ideal for keyword searches, ranking systems, and enterprise search engines.

Final Thoughts

IBM Granite 3.2 represents a significant step forward in AI, offering high performance in smaller models, advanced reasoning, document intelligence, and cost-effective forecasting.

With a focus on transparency, safety, and efficiency, IBM is delivering AI solutions that enterprises can trust—all while keeping models open-source and accessible.

Granite 3.2 is now available on IBM watsonx.ai, Hugging Face, Ollama, LMStudio, and Replicate.

IBM is proving that AI doesn’t have to be massive to be powerful—Granite 3.2 is lean, smart, and enterprise-ready.

Pallavi Singal is the Vice President of Content at ztudium, where she leads innovative content strategies and oversees the development of high-impact editorial initiatives. With a strong background in digital media and a passion for storytelling, Pallavi plays a pivotal role in scaling the content operations for ztudium’s platforms, including Businessabc, Citiesabc, IntelligentHQ, Wisdomia.ai, MStores, and many others. Her expertise spans content creation, SEO, and digital marketing, driving engagement and growth across multiple channels. Pallavi’s work is characterised by a keen insight into emerging trends in business, society, and technologies such as AI, blockchain, and the metaverse, making her a trusted voice in the industry.